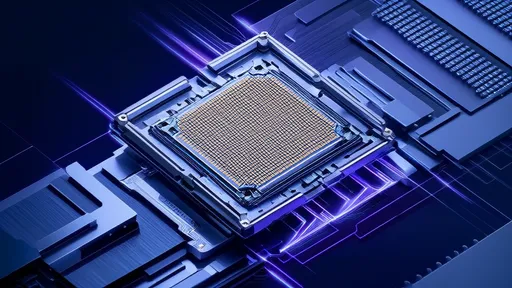

The race to push computing power beyond current limitations has led to the development of 3D chip architectures, where multiple layers of transistors are stacked vertically to maximize performance. However, this advancement comes with a significant challenge: heat dissipation. Traditional cooling methods struggle to keep up with the thermal demands of densely packed 3D chips, because a heat sink mounted on top of the package touches only the uppermost layer, and heat generated in buried layers must conduct through the layers and bonding interfaces above it before it can escape. Enter microfluidic cooling, a cutting-edge solution that integrates microscopic cooling channels directly into the chip’s structure. This technology promises to revolutionize thermal management in next-generation electronics, but its efficiency and practicality are still under intense scrutiny.

The Promise of Microfluidic Cooling

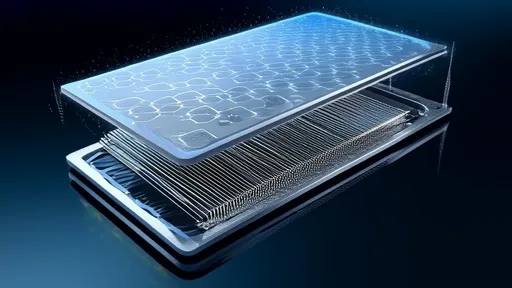

Microfluidic cooling operates on a simple yet ingenious principle: tiny fluid-filled channels are embedded within or between the layers of a 3D chip, allowing coolant to flow in close proximity to heat-generating components. In conventional air cooling, heat must first conduct out through the die, a thermal interface material, and a heat spreader before fan-driven airflow carries it away from the heat sink fins; a microfluidic system instead removes heat by forced convection of a liquid coolant right where the heat is generated. This approach offers several advantages. First, liquids such as water have a far higher volumetric heat capacity than air, meaning they can absorb and transport more heat per unit volume for a given temperature rise. Second, the proximity of the coolant to the heat sources shortens the conduction path and reduces thermal resistance, enabling faster and more efficient heat extraction.
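As a rough illustration of the first advantage, the sketch below compares the volumetric heat capacity of water and air and uses the sensible-heat balance Q = ρ·c_p·V̇·ΔT to estimate how much of each fluid must flow to carry away a given load. The property values are approximate textbook figures near 25 °C and 1 atm, and the 100 W load and 10 K coolant temperature rise are hypothetical numbers chosen only for illustration.

```python
# Approximate properties near 25 °C and 1 atm (textbook values, rounded).
WATER = {"density": 997.0, "cp": 4181.0}  # kg/m^3, J/(kg*K)
AIR = {"density": 1.18, "cp": 1005.0}     # kg/m^3, J/(kg*K)


def volumetric_heat_capacity(fluid):
    """Heat absorbed per cubic metre per kelvin of temperature rise, in J/(m^3*K)."""
    return fluid["density"] * fluid["cp"]


def volumetric_flow_for_load(power_w, delta_t_k, fluid):
    """Volumetric flow (m^3/s) needed to carry power_w with a coolant rise of delta_t_k.

    Rearranged from the sensible-heat balance Q = rho * cp * V_dot * dT.
    """
    return power_w / (volumetric_heat_capacity(fluid) * delta_t_k)


if __name__ == "__main__":
    ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
    print(f"water / air volumetric heat capacity: {ratio:.0f}x")
    # Hypothetical 100 W load with a 10 K allowed coolant temperature rise.
    for name, fluid in (("water", WATER), ("air", AIR)):
        flow_m3_s = volumetric_flow_for_load(100.0, 10.0, fluid)
        print(f"{name}: {flow_m3_s * 1e6:.1f} mL/s")
```

The ratio comes out at roughly 3,500, which is why a small stream of water can do the work of a large volume of air. The sketch says nothing about how heat gets from the chip into the fluid; that depends on channel geometry and flow conditions.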

Recent laboratory studies have reported that microfluidic cooling can handle heat fluxes exceeding 1,000 watts per square centimeter, far beyond the capabilities of traditional air cooling. This level of performance matters for 3D chips because the heat from every stacked layer must leave through the same footprint, so the heat flux per unit area climbs as layers are added. By maintaining lower operating temperatures, microfluidic cooling not only enhances chip reliability but can also allow higher clock speeds and better energy efficiency, since transistor leakage current rises with temperature. In essence, it could remove one of the main obstacles holding back 3D chip architectures.
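The stacking problem can be sketched with a one-dimensional thermal-resistance model. This is an illustration, not a validated chip model: the per-tier powers, the resistance across each bonding interface (`r_layer_k_per_w`), the heat-sink resistance (`r_sink_k_per_w`), and the local resistance to an interlayer coolant (`r_local_k_per_w`) are all hypothetical lumped values, and lateral spreading and coolant heating along the channel are ignored. With a single heat sink on top, heat from lower tiers accumulates as it flows upward; with a coolant layer beside each tier, each tier only has to shed its own heat.

```python
def temps_top_heat_sink(tier_powers_w, r_layer_k_per_w, r_sink_k_per_w, t_sink_c):
    """Tier temperatures (bottom tier first) when all heat leaves through a heat sink on top.

    The interface above tier i carries the combined power of tiers 0..i, so the
    upward heat flow grows with every tier it passes and the bottom tier,
    farthest from the sink, runs hottest.
    """
    n = len(tier_powers_w)
    temps = [0.0] * n
    # Top tier: the total power of the stack crosses the heat-sink resistance.
    temps[n - 1] = t_sink_c + r_sink_k_per_w * sum(tier_powers_w)
    # Walk downward, adding the temperature drop across each bonding interface.
    for i in range(n - 2, -1, -1):
        heat_through_interface = sum(tier_powers_w[: i + 1])
        temps[i] = temps[i + 1] + r_layer_k_per_w * heat_through_interface
    return temps


def temps_interlayer_cooling(tier_powers_w, r_local_k_per_w, t_coolant_c):
    """Tier temperatures when each tier has its own coolant layer and no heat crosses tiers."""
    return [t_coolant_c + r_local_k_per_w * p for p in tier_powers_w]


if __name__ == "__main__":
    powers = [25.0, 25.0, 25.0, 25.0]  # hypothetical watts per tier, bottom tier first
    print("top heat sink only:", temps_top_heat_sink(powers, 0.3, 0.2, 40.0))
    print("interlayer cooling:", temps_interlayer_cooling(powers, 0.5, 40.0))
```

With these made-up numbers the bottom tier reaches 105 °C under top-only cooling, while every tier sits at 52.5 °C with interlayer cooling. The absolute values mean little; the point is how the bottom-tier temperature grows as tiers are added.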

Engineering Challenges and Breakthroughs

Despite its promise, integrating microfluidic cooling into 3D chips is no small feat. One major hurdle is the fabrication of microscale channels without compromising the structural integrity of the chip. Techniques such as silicon etching and 3D printing have been used to create these intricate networks, but achieving uniformity and reliability at scale remains a challenge. The choice of coolant is also crucial. Water is a common choice because of its high specific heat and its relatively high thermal conductivity for a liquid, but it becomes electrically conductive once it picks up dissolved impurities, so researchers are exploring alternative fluids, including dielectric coolants, to avoid electrical short circuits in case of leaks.
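To show why a coolant's thermal conductivity and the channel size both matter, here is a sketch using the convective relation h = Nu·k/D_h. It assumes fully developed laminar flow with uniform wall heat flux in a circular channel, for which Nu = 48/11 ≈ 4.36; real rectangular microchannels have different Nusselt numbers depending on aspect ratio and entrance effects. Water's conductivity is taken as about 0.6 W/(m·K), and the "dielectric" is a hypothetical fluid with one tenth of that, a placeholder rather than any specific product's datasheet value. The 100 W/cm² wall heat flux is also hypothetical.

```python
# Nusselt number for fully developed laminar flow, uniform wall heat flux, circular tube.
NU_LAMINAR_UNIFORM_FLUX = 48.0 / 11.0  # about 4.36

K_WATER = 0.6                  # W/(m*K), approximate value near 25 °C
K_DIELECTRIC = K_WATER / 10.0  # hypothetical placeholder fluid, not a real datasheet value


def heat_transfer_coefficient(k_fluid, hydraulic_diameter_m, nusselt=NU_LAMINAR_UNIFORM_FLUX):
    """Convective coefficient h = Nu * k / D_h, in W/(m^2*K)."""
    return nusselt * k_fluid / hydraulic_diameter_m


def wall_to_fluid_delta_t(wall_heat_flux_w_m2, h):
    """Temperature difference between channel wall and bulk coolant, q'' / h, in K."""
    return wall_heat_flux_w_m2 / h


if __name__ == "__main__":
    q_wall = 1e6  # W/m^2 (100 W/cm^2) at the channel wall, hypothetical
    cases = [
        ("water, 100 um channel", K_WATER, 100e-6),
        ("dielectric, 100 um channel", K_DIELECTRIC, 100e-6),
        ("water, 50 um channel", K_WATER, 50e-6),
    ]
    for label, k, d_h in cases:
        h = heat_transfer_coefficient(k, d_h)
        dt = wall_to_fluid_delta_t(q_wall, h)
        print(f"{label}: h = {h:,.0f} W/(m^2*K), wall-to-fluid dT = {dt:.1f} K")
```

At a fixed wall heat flux, a fluid with a tenth of water's conductivity needs ten times the wall-to-coolant temperature difference (or much more wetted area) to move the same heat, while halving the channel diameter doubles h. This is one reason dielectric coolants trade thermal performance for electrical safety.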

Another critical consideration is the pumping mechanism. Moving fluid through microscopic channels requires precise control to maintain adequate flow rates without excessive energy consumption, because the pressure needed to drive a given flow rises steeply as channels shrink. Recent innovations in electrokinetic and piezoelectric pumps have shown promise as compact alternatives to conventional pumps. However, integrating these pumps into the chip package without adding significant bulk or complexity is an ongoing area of research. Engineers are also exploring passive cooling systems that rely on capillary action or phase-change materials to eliminate the need for pumps altogether.
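The pumping penalty can be estimated with the Hagen-Poiseuille law for fully developed laminar flow in a circular channel, Δp = 128·μ·L·Q/(π·D⁴), with ideal hydraulic power Δp·Q. The sketch assumes water with a viscosity of about 8.9×10⁻⁴ Pa·s near 25 °C; the 1 cm channel length, the 1 µL/s per-channel flow, and the channel diameters are hypothetical. Real microchannels are often rectangular, which changes the constant but not the steep dependence on channel size.

```python
import math

WATER_MU = 8.9e-4   # Pa*s, dynamic viscosity of water near 25 °C (approximate)
WATER_RHO = 997.0   # kg/m^3


def pressure_drop_pa(flow_m3_s, length_m, diameter_m, mu=WATER_MU):
    """Hagen-Poiseuille pressure drop for fully developed laminar flow in a circular channel."""
    return 128.0 * mu * length_m * flow_m3_s / (math.pi * diameter_m ** 4)


def pumping_power_w(flow_m3_s, dp_pa):
    """Ideal hydraulic power: pressure drop times volumetric flow (ignores pump losses)."""
    return dp_pa * flow_m3_s


def reynolds(flow_m3_s, diameter_m, rho=WATER_RHO, mu=WATER_MU):
    """Reynolds number; the laminar formula above only holds well below about 2,000."""
    area = math.pi * diameter_m ** 2 / 4.0
    velocity = flow_m3_s / area
    return rho * velocity * diameter_m / mu


if __name__ == "__main__":
    flow = 1e-9     # 1 uL/s per channel, hypothetical
    length = 0.01   # 1 cm channel, hypothetical
    for d in (100e-6, 50e-6):
        dp = pressure_drop_pa(flow, length, d)
        print(f"D = {d * 1e6:.0f} um: dp = {dp:,.0f} Pa, "
              f"power = {pumping_power_w(flow, dp):.2e} W, Re = {reynolds(flow, d):.1f}")
```

At a fixed flow rate the pressure drop scales as 1/D⁴, so halving the diameter multiplies it by 16. The Reynolds number here is around 14 for the 100 µm channel, comfortably inside the laminar regime the formula assumes.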

Real-World Applications and Future Prospects

The potential applications of microfluidic-cooled 3D chips extend far beyond traditional computing. Data centers, for instance, stand to benefit from this technology. With the rapid growth of cloud computing and artificial intelligence, data centers consume vast amounts of energy, and a significant share of it goes to cooling. Microfluidic systems could reduce this energy overhead substantially, making data centers more sustainable and cost-effective. Similarly, high-performance computing (HPC) and edge computing devices could leverage this technology to achieve higher performance in compact form factors.
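Power usage effectiveness (PUE), the ratio of total facility power to the power drawn by IT equipment, is one common way to express this cooling overhead. The sketch below uses entirely hypothetical figures for a 1,000 kW IT load to show how a cut in cooling power would move PUE; it does not reflect measured data for any microfluidic deployment.

```python
def pue(it_kw, cooling_kw, other_overhead_kw):
    """Power usage effectiveness: total facility power divided by IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


if __name__ == "__main__":
    # Hypothetical facility: 1,000 kW of IT load plus 100 kW of non-cooling overhead
    # (power conversion losses, lighting, and so on).
    baseline = pue(1000.0, 400.0, 100.0)  # assumed cooling power with conventional cooling
    improved = pue(1000.0, 150.0, 100.0)  # assumed reduced cooling power
    print(f"baseline PUE: {baseline:.2f}, with reduced cooling: {improved:.2f}")
```

A PUE of 1.0 would mean every watt goes to computing, so the distance above 1.0 is the overhead; in this made-up case, cutting cooling from 400 kW to 150 kW drops PUE from 1.50 to 1.25.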

Looking ahead, the integration of microfluidic cooling with emerging technologies like quantum computing and neuromorphic chips could open new frontiers. Quantum systems, in particular, often operate at cryogenic temperatures, where conventional liquid coolants would freeze, so any microfluidic approach there would need cryogenic fluids; whether it could maintain these conditions more efficiently remains an open question. Meanwhile, the rise of heterogeneous integration, where different types of chips are combined in a single package, further underscores the need for advanced thermal management solutions. As the limits of Moore’s Law are tested, microfluidic cooling could play a pivotal role in sustaining the pace of semiconductor innovation.

The Road Ahead

While microfluidic cooling for 3D chips is still in its relative infancy, the progress made so far is undeniably exciting. Researchers and engineers are tackling the technical challenges with creativity and rigor, driven by the immense potential of this technology. As fabrication techniques improve and new materials are developed, the barriers to widespread adoption will likely diminish. The collaboration between semiconductor manufacturers, material scientists, and thermal engineers will be key to bringing this technology to maturity.

In the coming years, we can expect to see microfluidic cooling move from the lab to commercial products, first in niche applications and eventually in mainstream electronics. The journey won’t be without obstacles, but the rewards—cooler, faster, and more efficient chips—are well worth the effort. As the demand for computational power continues to soar, microfluidic cooling may very well become the standard for thermal management in the era of 3D integrated circuits.

By /Jul 29, 2025
