Artificial intelligence is undergoing a quiet shift in how machine learning models are deployed in resource-constrained environments. At the center of that shift is sparse training for edge AI, an emerging approach that questions the assumption that bigger networks are always better. Where cloud-based AI often relies on sheer scale, sparse training treats efficiency as its guiding principle, producing models that are leaner and faster and that can, in many cases, match the accuracy of their dense counterparts when deployed on edge devices.

Traditional AI models have followed a path of increasing complexity, and architectures with billions of parameters are now commonplace. This approach works well when abundant computational resources are available in data centers, but it breaks down under the memory, power, and latency limits of edge deployment. Sparse training flips this script by systematically identifying and eliminating redundant connections during the training phase itself, rather than after training is finished. The result is a model that can stay close to dense-model accuracy while needing far less memory and computation.

The appeal of sparse training lies in how it loosely mirrors the efficiency of biological neural networks. Just as the human brain does not maintain full connectivity between all neurons, sparse AI models learn to keep only the most important connections. Researchers have found that typical neural networks are heavily over-parameterized, with a small fraction of connections accounting for most of the model's performance. By focusing computational resources on these critical pathways, sparse training can achieve large efficiency gains, often with little or no loss in accuracy.
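To make the idea of "keeping only the most important connections" concrete, here is a minimal sketch of magnitude-based pruning, one simple and widely used importance criterion. It assumes that a weight's absolute value is a reasonable proxy for its importance, which is a simplification. The function names and the toy weight list are illustrative, not taken from any particular library.

```python
def magnitude_mask(weights, sparsity):
    """Return a 0/1 mask that drops the smallest-magnitude fraction of weights.

    Assumption: |weight| is used as the importance score.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_prune]:
        mask[i] = 0
    return mask


def apply_mask(weights, mask):
    """Zero out every weight whose mask entry is 0."""
    return [w if m else 0.0 for w, m in zip(weights, mask)]


# Toy layer with eight weights; prune half of them.
weights = [0.9, -0.02, 0.4, 0.01, -0.7, 0.03, 0.05, -0.6]
mask = magnitude_mask(weights, sparsity=0.5)
pruned = apply_mask(weights, mask)
print(mask)
print(pruned)
```

In a real training loop the mask would be reapplied after every optimizer step so that pruned connections stay at zero.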

Edge devices present unique challenges that make sparse training particularly compelling. From smartphones to industrial IoT sensors, these devices operate under strict power constraints with limited memory and processing capabilities. A conventionally trained model might struggle with real-time inference under such conditions, but a sparsely trained equivalent can deliver responsive performance while sipping rather than gulping power. This difference becomes crucial when deploying AI across thousands of edge devices where energy efficiency translates directly to operational costs and battery life.
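A rough back-of-the-envelope calculation shows how sparsity translates into memory savings, and where it does not. The sketch below assumes 32-bit weights and a compressed sparse row (CSR) layout with 32-bit indices. Both are illustrative choices, since real deployments may use other formats and bit widths.

```python
def dense_bytes(rows, cols, value_bytes=4):
    """Storage for a dense weight matrix."""
    return rows * cols * value_bytes


def csr_bytes(rows, cols, sparsity, value_bytes=4, index_bytes=4):
    """Approximate storage for the same matrix in CSR form.

    CSR keeps one value and one column index per nonzero,
    plus one row-pointer entry per row (and one extra).
    """
    nnz = round(rows * cols * (1.0 - sparsity))
    return nnz * (value_bytes + index_bytes) + (rows + 1) * index_bytes


rows, cols = 1024, 1024
for sparsity in (0.5, 0.8, 0.9, 0.95):
    ratio = csr_bytes(rows, cols, sparsity) / dense_bytes(rows, cols)
    print(f"sparsity {sparsity:.2f}: CSR uses {ratio:.2f}x the dense storage")
```

Under these assumptions, index overhead means a matrix that is only half zeros saves nothing in CSR form. The savings appear at higher sparsity levels or with more compact index encodings.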

Implementation strategies for sparse training vary across research groups and commercial products. Some approaches begin with dense networks and progressively prune connections during training, while others keep the network sparse throughout the entire learning process, periodically changing which connections are active. The most advanced methods use dynamic sparsity patterns that adapt to the input, so that the model in effect reconfigures its active architecture according to the complexity of each input. This adaptability is particularly valuable in edge environments, where the characteristics of input data may vary significantly.
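The two families of strategies described above can be sketched in a few lines. The first function is a gradual pruning schedule that ramps the target sparsity from an initial to a final value. The cubic ramp is one common choice in the gradual-pruning literature and is an assumption here, not something the approaches above prescribe. The second function is a hypothetical prune-and-regrow step that keeps the number of active connections constant. Random regrowth is again only one of several possible criteria.

```python
import random


def target_sparsity(step, start_step, end_step, initial=0.0, final=0.9):
    """Dense-to-sparse: cubic ramp of the sparsity target over training steps."""
    if step <= start_step:
        return initial
    if step >= end_step:
        return final
    progress = (step - start_step) / (end_step - start_step)
    # Prunes quickly at first, then slows as the target approaches `final`.
    return final + (initial - final) * (1.0 - progress) ** 3


def prune_and_regrow(weights, mask, fraction, rng):
    """Sparse-throughout: drop the weakest active weights, regrow as many elsewhere."""
    active = [i for i, m in enumerate(mask) if m == 1]
    inactive = [i for i, m in enumerate(mask) if m == 0]
    n_swap = min(int(len(active) * fraction), len(inactive))
    # Drop the active connections with the smallest magnitude.
    weakest = sorted(active, key=lambda i: abs(weights[i]))[:n_swap]
    # Regrow the same number at random positions that were inactive before.
    regrown = rng.sample(inactive, n_swap)
    new_weights, new_mask = list(weights), list(mask)
    for i in weakest:
        new_mask[i] = 0
        new_weights[i] = 0.0
    for i in regrown:
        new_mask[i] = 1
        new_weights[i] = 0.0  # regrown connections start at zero and are then trained
    return new_weights, new_mask


# Schedule: sparsity target at a few points of a 1000-step run.
for step in (0, 250, 500, 750, 1000):
    print(step, round(target_sparsity(step, 0, 1000), 4))

# Prune-and-regrow: the count of active connections stays the same.
weights = [0.9, 0.0, 0.4, 0.0, -0.7, 0.0, 0.0, -0.05]
mask = [1, 0, 1, 0, 1, 0, 0, 1]
new_weights, new_mask = prune_and_regrow(weights, mask, 0.5, random.Random(0))
print(sum(mask), sum(new_mask))
```

In both cases the pruning decision would be interleaved with ordinary gradient updates, which are omitted here to keep the sketch short.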

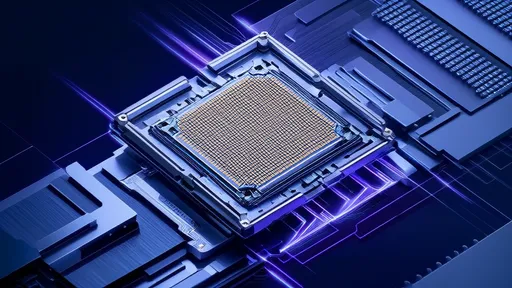

The hardware implications of sparse training are equally significant. Traditional AI accelerators were designed for dense matrix operations, so when weights are sparse they often waste cycles multiplying by zero. A new generation of processors is emerging that is specifically optimized for sparse computations, with architectural features that skip zero-value operations entirely. When paired with sparsely trained models, these chips can deliver substantially better performance per watt than dense execution, opening new possibilities for always-on AI in power-sensitive applications.
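The following sketch illustrates in software what "skipping zero-value operations" means. It stores a matrix in compressed sparse row (CSR) form and counts the multiplications performed in a matrix-vector product. This is only an analogy for what sparse-aware hardware does. On a general-purpose CPU the indexing overhead can cancel the gains at moderate sparsity. The function names are illustrative.

```python
def to_csr(dense):
    """Convert a list-of-lists matrix to CSR arrays (values, col_idx, row_ptr)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Multiply a CSR matrix by vector x, touching only the stored nonzeros."""
    y = []
    multiplies = 0
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_idx[k]]
            multiplies += 1
        y.append(acc)
    return y, multiplies


dense = [
    [0.0, 2.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 3.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 4.0, 0.0],
]
x = [1.0, 2.0, 3.0, 4.0]
y, multiplies = csr_matvec(*to_csr(dense), x)
dense_multiplies = len(dense) * len(dense[0])
print(y, multiplies, dense_multiplies)
```

For this 4x4 example the sparse product needs 4 multiplications where a dense product would perform 16.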

Real-world applications are beginning to demonstrate the potential of this technology. In healthcare, sparse models enable complex diagnostics on wearable devices without uploading patient data to the cloud, which helps protect privacy. Automotive systems use sparse training to run sophisticated driver-assistance algorithms locally, so that latency-critical functions do not depend on a cellular connection. Consumer electronics benefit as well, with smartphones using sparse models to deliver camera enhancements and voice recognition that work reliably without an internet connection.

Despite these advances, challenges remain in bringing sparse training into mainstream adoption. The training process itself requires careful tuning to prevent premature pruning of important connections, and not all network architectures respond equally well to sparsification. Researchers are actively working on more robust algorithms that can maintain model quality across diverse applications while achieving higher sparsity ratios. The field is also developing better metrics to evaluate the true efficiency gains when accounting for both training and inference phases.

The environmental impact of sparse training shouldn't be overlooked. As AI adoption grows rapidly, the energy demands of training and running massive models have raised legitimate sustainability concerns. Sparse models offer one way to reduce this footprint: fewer operations and less memory traffic per inference mean less energy per inference. Multiplied across data centers and millions of edge devices, these efficiency gains could contribute meaningfully to reducing the technology sector's carbon emissions.

Looking ahead, the convergence of sparse training techniques with other edge AI innovations promises even greater gains. Combining sparsity with quantization, knowledge distillation, and neural architecture search creates models that push the boundaries of what's possible in resource-constrained environments. As these techniques mature, we may see a fundamental shift in how AI systems are developed, with efficiency becoming a primary design consideration rather than an afterthought.
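As one illustration of how these techniques compose, the sketch below applies symmetric 8-bit quantization to a set of already pruned weights. The scheme uses a single per-tensor scale with no zero point. That is an assumption chosen for simplicity, and it has the convenient property that pruned weights remain exactly zero after quantization, so the sparsity pattern is preserved.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float weights."""
    return [v * scale for v in q]


# Weights after pruning: half of the entries are already zero.
pruned = [0.9, 0.0, 0.4, 0.0, -0.7, 0.0, 0.0, -0.6]
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)

print(q)
print(max(abs(a - b) for a, b in zip(pruned, restored)))  # worst-case rounding error
```

Each surviving weight now occupies one byte instead of four, on top of the savings from storing only the nonzero entries.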

The story of sparse training for edge AI is still being written, but its early chapters suggest a future where powerful artificial intelligence becomes truly ubiquitous. By moving beyond the "bigger is better" mentality that has dominated AI development, researchers and engineers are unlocking new possibilities for intelligent systems that work within the constraints of the physical world. This isn't just about making existing models smaller. It's about reimagining how we build AI from the ground up to thrive at the edge.

By /Jul 29, 2025
