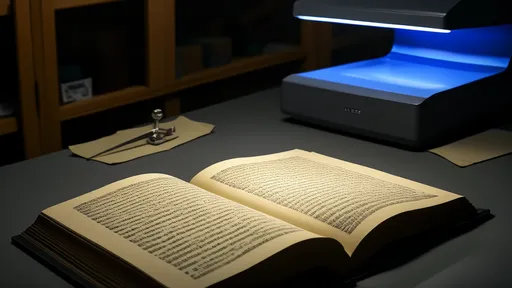

The world of cultural heritage preservation has entered a new era with the advent of terahertz scanning technology. This groundbreaking approach is revolutionizing how we interact with ancient manuscripts, offering unprecedented access to texts that were previously illegible or too fragile to handle. Unlike conventional methods, terahertz waves can penetrate layers of damage and degradation without causing harm to the delicate materials.

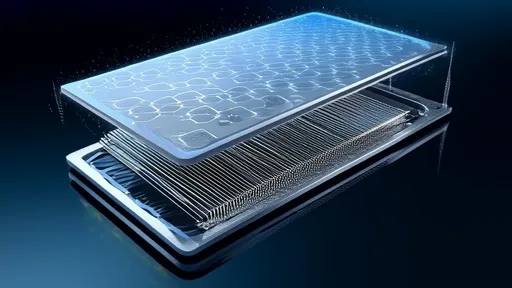

At the heart of this innovation lie the unique properties of terahertz radiation. Occupying the part of the electromagnetic spectrum between microwaves and infrared light, these waves interact with materials in ways that reveal hidden information. When applied to ancient documents, the technology can distinguish between ink and parchment, detect overwritten texts, and even identify the chemical composition of materials used centuries ago. Researchers are now able to uncover palimpsests (manuscripts where the original writing was scraped off so the parchment could be reused) with remarkable clarity.

Cultural institutions worldwide are taking notice of terahertz scanning's potential. The British Library has begun experimenting with the technology to examine medieval manuscripts, while the Vatican Library is exploring its use for analyzing ancient religious texts. In China, archaeologists have employed terahertz scanners to study bamboo slips from the Warring States period, revealing philosophical texts that had been lost to time. These applications demonstrate how the technology bridges the gap between preservation and accessibility.

The process of terahertz scanning involves sophisticated equipment that emits controlled pulses of radiation. As these pulses interact with the manuscript, detectors measure the reflected signals. Because echoes from deeper layers arrive later than echoes from the surface, the timing of each reflection encodes depth, which makes it possible to build detailed three-dimensional maps of the document's structure. Advanced algorithms then reconstruct these data points into readable images, separating layers of text that have merged over centuries. What makes this approach particularly valuable is its non-invasive nature: precious artifacts remain untouched during the entire procedure.
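As a rough illustration of how reflected pulses can be turned into depth information, the sketch below converts round-trip echo delays into depths with the standard time-of-flight relation, depth = c × delay / (2 × n). The function name, the sample delays, and the refractive index of 1.5 are illustrative assumptions, not values taken from any particular scanner or study.

```python
# Speed of light in vacuum, expressed in millimetres per picosecond.
C_MM_PER_PS = 0.299792458


def layer_depths_mm(echo_delays_ps, refractive_index=1.5):
    """Convert round-trip echo delays (picoseconds) to depths (millimetres).

    Each delay is measured relative to the echo from the front surface.
    The pulse travels down to the layer and back, hence the factor of 2,
    and it travels more slowly inside the material, hence the division
    by the refractive index (1.5 is an assumed, illustrative value).
    """
    if refractive_index <= 0:
        raise ValueError("refractive_index must be positive")
    return [C_MM_PER_PS * t / (2.0 * refractive_index) for t in echo_delays_ps]


if __name__ == "__main__":
    # Hypothetical delays for three interfaces below the surface echo.
    delays_ps = [0.0, 1.0, 2.5]
    for delay, depth in zip(delays_ps, layer_depths_mm(delays_ps)):
        print(f"delay {delay:4.1f} ps -> depth {depth:.3f} mm")
```

Under these assumptions, a delay of one picosecond corresponds to roughly a tenth of a millimetre of material, which is why picosecond-scale timing is needed to tell apart layers as thin as individual pages.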

One of the most exciting aspects of terahertz technology is its ability to reveal the "biography" of manuscripts. By analyzing the different layers of writing and materials, researchers can trace how documents were altered, reused, or repaired throughout history. This forensic capability provides insights not just into the texts themselves, but into the cultural practices of the societies that produced them. A single scan might show how a medieval prayer book was created from repurposed classical texts, telling a story of resource scarcity and changing religious priorities.

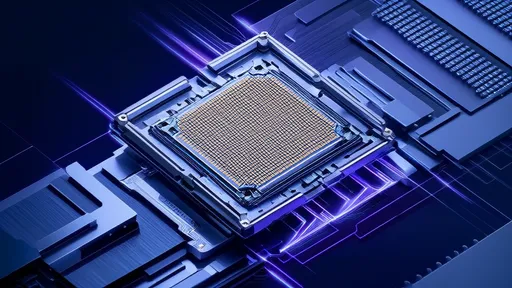

Despite its promise, terahertz scanning faces several challenges that researchers are working to overcome. The equipment remains expensive and requires specialized operators, limiting its accessibility to well-funded institutions. Additionally, the interpretation of scan data demands expertise in both physics and paleography—a rare combination of skills. There are also physical limitations; very thick or metal-containing materials can interfere with the scanning process. However, ongoing advancements in machine learning and imaging technology are steadily addressing these obstacles.

The implications for scholarship are profound. Historians can now access texts that were previously considered lost, potentially rewriting our understanding of certain historical periods. Linguists gain new corpora of ancient languages to study, while conservationists obtain detailed information about material degradation that informs better preservation strategies. Perhaps most importantly, the technology allows these discoveries to happen without risking damage to irreplaceable cultural treasures.

Looking ahead, the integration of terahertz scanning with other technologies promises even greater breakthroughs. Researchers are exploring combinations with multispectral imaging and artificial intelligence to enhance the interpretation of scan results. There's also potential for developing portable terahertz scanners that could be used at archaeological sites, bringing the laboratory to the artifacts rather than vice versa. As these technologies mature, we may be on the verge of a new golden age of manuscript discovery and analysis.

Ethical considerations naturally accompany such powerful technology. Institutions must balance the desire for knowledge with respect for the physical integrity of artifacts. There are also questions about intellectual property rights when previously unknown texts are discovered. The international community continues to develop guidelines for the responsible use of terahertz scanning in cultural heritage contexts, ensuring that this remarkable tool serves the cause of knowledge without compromising preservation ethics.

The story of terahertz scanning for ancient manuscripts is still being written, much like the palimpsests it reveals. As the technology progresses, each new application uncovers another layer of human history waiting to be rediscovered. From monastic libraries to the desert caves that preserved the Dead Sea Scrolls, this innovative approach is opening windows to the past that were previously thought permanently shuttered. The whispers of ancient scribes, long silenced by time, are finding new voices through the marriage of cutting-edge physics and timeless human curiosity about our shared heritage.

By /Jul 29, 2025
