The rapid evolution of enterprise IT infrastructure has brought hyperconverged infrastructure (HCI) into the spotlight, particularly when combined with GPU virtualization. This powerful pairing is reshaping how organizations deploy, manage, and scale their computational resources, especially in fields requiring high-performance computing like artificial intelligence, machine learning, and advanced analytics.

At its core, hyperconverged infrastructure integrates compute, storage, and networking into a single software-defined solution. When GPU virtualization is layered onto this architecture, GPUs become a pooled resource like the rest of the cluster. Data centers can then provision GPU capacity dynamically across multiple virtual machines or containers instead of binding each physical GPU to a single server or application.

The marriage of HCI and GPU virtualization solves several persistent challenges in modern computing environments. Traditional GPU deployments often created resource silos, in which an expensive GPU dedicated to a single application sat idle whenever that application was not using it fully. With virtualization, these processors can be shared across multiple workloads, substantially improving the return on hardware that carries significant acquisition costs.
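To make the utilization argument concrete, the sketch below compares a one-GPU-per-workload layout with a shared pool. It is a simplified model under stated assumptions: each workload is described only by its average GPU utilization in whole percent (the figures in the usage example are hypothetical), and shared GPUs are packed with first-fit decreasing, a standard bin-packing heuristic, up to a headroom cap. Real sharing must also account for peak demand and GPU memory, which this model ignores.

```python
def gpus_needed_dedicated(demands):
    """One physical GPU per workload, regardless of how busy each one is."""
    return len(demands)


def gpus_needed_shared(demands, max_util=80):
    """Pack average demands onto shared GPUs using first-fit decreasing.

    demands: average utilization of one GPU per workload, in whole percent
             (a simplifying assumption; peaks and memory are ignored).
    max_util: how full a shared GPU may get, leaving headroom for bursts.
    """
    free = []  # remaining capacity, in percent, of each shared GPU
    for d in sorted(demands, reverse=True):  # place the largest workloads first
        if d > max_util:
            raise ValueError(f"demand {d}% exceeds the per-GPU cap of {max_util}%")
        for i, room in enumerate(free):
            if d <= room:  # first GPU with enough room wins
                free[i] = room - d
                break
        else:
            free.append(max_util - d)  # no GPU had room, so add one to the pool
    return len(free)


demands = [30, 25, 20, 15, 10, 40]  # hypothetical average utilization, percent
print(gpus_needed_dedicated(demands), gpus_needed_shared(demands))  # 6 2
```

With these hypothetical demands, six dedicated GPUs collapse to two shared ones. Because the model works from averages, treat its result as an optimistic estimate rather than a sizing recommendation.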

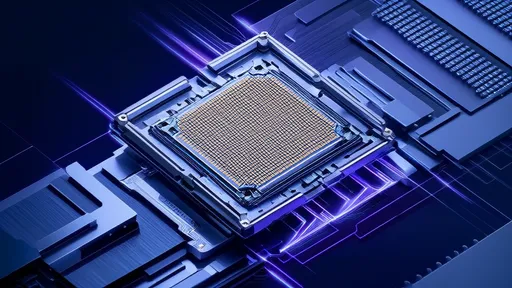

Implementing GPU virtualization in a hyperconverged environment requires careful attention to several technical factors. The virtualization layer must deliver near-native performance while keeping workloads properly isolated from one another. Common approaches include time-slicing the GPU's compute engines among virtual machines, partitioning GPU memory so that each guest sees only its own share, and, on some hardware, splitting the GPU itself into isolated instances. Together with careful scheduling, these techniques keep the overhead typically associated with virtualization low.
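One way to picture time-sliced sharing is proportional-share scheduling. The sketch below uses stride scheduling, a classic textbook algorithm swapped in here for illustration; production vGPU schedulers are vendor-specific and are not necessarily built this way. Each VM receives a hypothetical integer weight, and the scheduler hands out GPU time slices so that, over time, each VM's share of slices tracks its weight.

```python
import heapq


def stride_schedule(weights, num_slices):
    """Hand out GPU time slices in proportion to each VM's weight.

    weights: dict of VM name -> positive integer share (hypothetical values).
    Returns the VM name that runs in each successive time slice.
    """
    STRIDE1 = 1 << 20  # large constant so integer strides stay precise
    heap = []
    for name, weight in weights.items():
        if weight <= 0:
            raise ValueError(f"weight for {name!r} must be positive")
        stride = STRIDE1 // weight
        # Heap entries are (pass value, name, stride); names break ties.
        heapq.heappush(heap, (stride, name, stride))

    order = []
    for _ in range(num_slices):
        pass_value, name, stride = heapq.heappop(heap)  # lowest pass runs next
        order.append(name)
        # Advancing by the stride means heavier VMs (smaller strides) return sooner.
        heapq.heappush(heap, (pass_value + stride, name, stride))
    return order


print(stride_schedule({"vm-a": 2, "vm-b": 1, "vm-c": 1}, 8))
# ['vm-a', 'vm-a', 'vm-b', 'vm-c', 'vm-a', 'vm-a', 'vm-b', 'vm-c']
```

Over these eight slices, the VM with twice the weight receives twice as many slices, which is the isolation-by-share property a time-sliced GPU scheduler aims for.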

Security remains a paramount concern when virtualizing GPU resources. Multi-tenant environments demand robust isolation mechanisms to prevent data leakage or interference between workloads. Leading HCI providers have extended their security controls to virtualized GPU environments, including encrypted memory spaces and strict access controls.

The benefits of this combined approach extend beyond simple resource sharing. Administrators gain centralized management capabilities for both traditional computing resources and GPU acceleration through a single interface. This unified management significantly reduces operational complexity compared to maintaining separate infrastructure stacks for CPU and GPU workloads.

Performance optimization in these environments presents unique challenges. Workloads requiring GPU acceleration often have specific performance characteristics that differ from traditional computing tasks. The hyperconverged platform must intelligently balance resources between CPU, memory, storage, and GPU components to prevent bottlenecks that could negate the benefits of acceleration.
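A simple way to reason about these bottlenecks is that a pipeline's steady-state throughput is capped by its slowest stage. The sketch below assumes a workload can be modeled as a chain of stages with known sustainable rates; the stage names and rates in the example are hypothetical. It reports the bottleneck and how much of the GPU's capacity is actually used.

```python
def find_bottleneck(stage_rates):
    """Return (stage, rate) for the slowest stage of a pipeline.

    stage_rates: dict of stage name -> items per second that stage can sustain.
    """
    if not stage_rates:
        raise ValueError("need at least one stage")
    slowest = min(stage_rates, key=stage_rates.get)
    return slowest, stage_rates[slowest]


def gpu_utilization(stage_rates, gpu_stage="gpu_compute"):
    """Fraction of the GPU stage's capacity used when the pipeline runs at its bottleneck rate."""
    _, throughput = find_bottleneck(stage_rates)
    return throughput / stage_rates[gpu_stage]


rates = {"storage_read": 900, "cpu_preprocess": 1200, "gpu_compute": 3000}  # hypothetical
print(find_bottleneck(rates))  # ('storage_read', 900)
print(gpu_utilization(rates))  # 0.3
```

In this example the GPU sits 70% idle because storage cannot feed it fast enough, so adding GPU capacity would not help while raising storage throughput would. This is exactly the kind of imbalance a hyperconverged platform has to manage across CPU, memory, storage, and GPU.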

Real-world deployments demonstrate the transformative potential of this technology combination. In healthcare, research institutions are using virtualized GPU resources in HCI environments to accelerate medical imaging analysis while maintaining strict patient data isolation. Financial services firms leverage the same technology for real-time fraud detection across thousands of simultaneous transactions.

The evolution of GPU virtualization technologies continues to push the boundaries of what's possible in hyperconverged environments. Recent advancements include live migration of GPU-accelerated virtual machines, allowing workloads to move between physical hosts with minimal disruption. This capability brings new flexibility to planned maintenance and workload balancing, because a host can be drained without shutting down the workloads running on it.

Looking ahead, the convergence of HCI and GPU virtualization appears poised for continued growth. As workloads become increasingly dependent on parallel processing capabilities, the demand for flexible, scalable GPU resources will only intensify. The hyperconverged approach offers a path forward that balances performance, efficiency, and manageability in ways that traditional infrastructure cannot match.

Organizations considering this technology must evaluate their specific workload requirements and growth projections. While the benefits are substantial, successful implementation requires careful planning around workload placement, resource allocation policies, and performance monitoring. The most effective deployments often begin with targeted pilot projects before expanding to broader production environments.
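Placement policies can be prototyped before a pilot. The sketch below is a minimal first-fit placer that checks two resources at once, vCPUs and GPU memory; the `Host` and `VMRequest` types, the VM and host names, and the capacities are all hypothetical, and real HCI schedulers weigh many more factors, such as storage locality and affinity rules.

```python
from dataclasses import dataclass, field


@dataclass
class Host:  # hypothetical host model: free capacity on each dimension
    name: str
    free_vcpus: int
    free_gpu_mem_gb: int
    vms: list = field(default_factory=list)


@dataclass
class VMRequest:  # hypothetical VM request: what the workload needs
    name: str
    vcpus: int
    gpu_mem_gb: int


def place(requests, hosts):
    """First-fit placement: each VM goes to the first host with room on every dimension.

    Returns a dict of VM name -> host name, or None for VMs that did not fit.
    Host capacities are decremented in place as VMs are placed.
    """
    placement = {}
    for req in requests:
        target = next(
            (h for h in hosts
             if h.free_vcpus >= req.vcpus and h.free_gpu_mem_gb >= req.gpu_mem_gb),
            None,
        )
        if target is not None:
            target.free_vcpus -= req.vcpus
            target.free_gpu_mem_gb -= req.gpu_mem_gb
            target.vms.append(req.name)
        placement[req.name] = target.name if target is not None else None
    return placement


hosts = [Host("h1", 32, 48), Host("h2", 32, 48)]
requests = [
    VMRequest("train", 16, 40),
    VMRequest("infer1", 8, 8),
    VMRequest("infer2", 8, 8),
    VMRequest("analytics", 16, 0),
    VMRequest("big", 16, 48),
]
print(place(requests, hosts))
# {'train': 'h1', 'infer1': 'h1', 'infer2': 'h2', 'analytics': 'h2', 'big': None}
```

Note that `infer2` lands on `h2` even though `h1` still has free vCPUs, because `h1`'s GPU memory is exhausted. Checking every resource dimension, not just the GPU, is what keeps a placement policy from stranding capacity.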

The vendor landscape for HCI with GPU virtualization support continues to evolve rapidly. Established infrastructure providers and newer specialized firms alike are bringing innovative solutions to market. Evaluation criteria should extend beyond simple performance metrics to include management capabilities, ecosystem integration, and the depth of virtualization features offered.

As with any transformative technology, challenges remain in the widespread adoption of GPU virtualization within hyperconverged environments. Some legacy applications may require modification to fully leverage virtualized GPU resources, and certain specialized workloads may still benefit from dedicated physical GPU configurations. However, for the majority of modern computing needs, the combination delivers compelling advantages.

The environmental impact of computing infrastructure has become an increasing concern for organizations worldwide. Here too, HCI with GPU virtualization offers benefits. By improving utilization rates of expensive, power-hungry GPU resources, organizations can reduce their overall energy consumption and physical footprint while maintaining or even increasing computational capacity.

Training and skills development represent another critical consideration. IT teams accustomed to traditional infrastructure may need to develop new competencies to manage these converged, virtualized environments effectively. Forward-looking organizations are investing in training programs to ensure their staff can maximize the potential of these advanced architectures.

The financial implications of adopting HCI with GPU virtualization extend beyond simple hardware cost comparisons. The operational efficiencies gained through simplified management and improved resource utilization often deliver substantial long-term savings that outweigh initial investment costs. Comprehensive total cost of ownership analyses typically reveal advantages that may not be immediately apparent from surface-level evaluations.

Industry standards for GPU virtualization in hyperconverged environments continue to mature. This standardization is critical for ensuring interoperability between components from different vendors and providing organizations with flexibility in building their ideal solutions. Participation in relevant standards bodies can help enterprises stay ahead of these developments.

Ultimately, the combination of hyperconverged infrastructure and GPU virtualization represents more than just another technological advancement. It signals a fundamental shift in how organizations approach computational resource allocation, particularly for demanding workloads that require acceleration. As the technology matures and adoption grows, it may well become the default approach for next-generation computing infrastructure.

By /Jul 29, 2025
