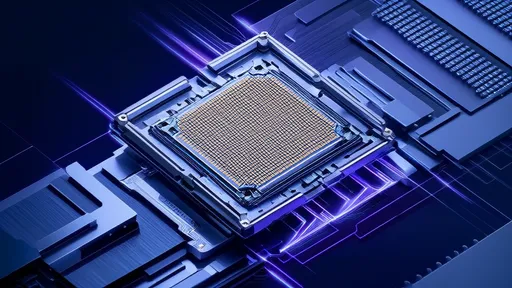

The semiconductor industry is rethinking its foundations as traditional von Neumann architectures struggle to meet the demands of modern computing workloads. At the forefront of this shift is in-memory computing with mixed-signal architectures, an approach that could change how data is processed in the post-Moore's Law era.

Breaking the Memory Wall

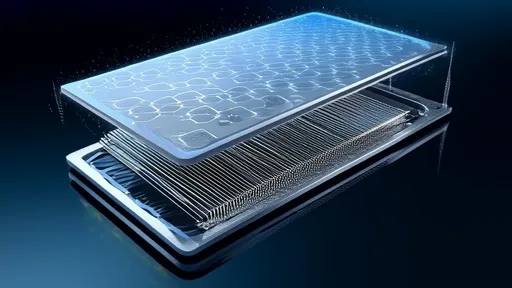

For decades, the separation between processing units and memory has created what engineers call the "memory wall": the bottleneck caused by shuttling data back and forth between these components. In-memory computing architectures collapse this distinction by performing computations directly within memory arrays, which avoids much of the energy-hungry data movement. The mixed-signal approach pairs analog computation inside the memory with digital logic around it, creating a hybrid system that draws on the strengths of both domains.

Researchers at universities and corporate labs have reported that this architecture can achieve orders-of-magnitude improvements in both energy efficiency and throughput for specific workloads. The secret lies in the physics of the memory devices themselves, whether resistive RAM, phase-change memory, or other emerging technologies: the way these devices conduct current naturally carries out certain mathematical operations, such as multiplication and summation, as part of their normal functioning.
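To make the "physics does the math" idea concrete, here is a minimal sketch of an idealized resistive crossbar. It assumes each cell behaves as a perfect conductance, so that the current through a cell is conductance times voltage (Ohm's law) and the currents on a shared column wire simply add (Kirchhoff's current law). The function name `crossbar_mvm` and the example values are illustrative assumptions, not taken from any particular device or product.

```python
def crossbar_mvm(conductances, voltages):
    """Column currents of an idealized resistive crossbar (assumed model).

    conductances[i][j]: conductance in siemens of the cell at row i, column j.
    voltages[i]: voltage in volts applied to row i.
    Returns one current in amperes per column.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law gives the cell current; the column wire sums them.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 2x2 array: stored weights are conductances, inputs are voltages.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.5, 0.25]
currents = crossbar_mvm(G, V)  # approximately [1.25e-06, 2e-06] amperes
```

In this simplified picture every cell contributes at the same time, so the whole matrix-vector product is available in a single read step. The nested loops are only there to simulate that behavior in software.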

The Analog Advantage

At the heart of mixed-signal in-memory computing is the recognition that not all computations need the precision of digital logic. Many AI/ML workloads, particularly those involving matrix-vector multiplications common in neural networks, can tolerate analog computation's inherent approximations. By processing these operations in the analog domain within memory arrays, systems can achieve tremendous parallelism while consuming minimal energy.

This approach mirrors biological neural networks where imperfect but massively parallel analog processing leads to remarkable efficiency. The mixed-signal architecture strategically places analog computation where it provides maximum benefit while retaining digital circuitry for operations requiring precision, creating an optimal balance between efficiency and accuracy.
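The division of labor described above can be sketched as a toy pipeline: digital inputs pass through a digital-to-analog converter (DAC), the multiply-accumulate happens in the "analog" array, an analog-to-digital converter (ADC) reads the result back, and a precise digital step finishes the job. Everything here is an assumption made for illustration: the uniform `quantize` model of the converters, the 8-bit resolutions, the full-scale ranges, and the choice of ReLU as the digital operation.

```python
def quantize(x, bits, full_scale):
    """Round x to the nearest level of a uniform converter on [-full_scale, full_scale]."""
    levels = 2 ** (bits - 1) - 1
    clipped = max(-full_scale, min(full_scale, x))
    return round(clipped / full_scale * levels) / levels * full_scale


def mixed_signal_layer(weights, inputs, dac_bits=8, adc_bits=8,
                       in_scale=1.0, out_scale=4.0):
    """One layer: DAC -> analog multiply-accumulate -> ADC -> digital ReLU."""
    # Digital to analog: inputs become (quantized) analog levels.
    analog_in = [quantize(x, dac_bits, in_scale) for x in inputs]
    # Analog domain: each output is a weighted sum formed inside the array.
    analog_out = [sum(w * x for w, x in zip(row, analog_in)) for row in weights]
    # Analog to digital: read the sums back with limited resolution.
    digital_out = [quantize(y, adc_bits, out_scale) for y in analog_out]
    # Digital domain: an exact nonlinearity applied to the converted values.
    return [max(0.0, y) for y in digital_out]


# Hypothetical weights and inputs; each row of `weights` produces one output.
weights = [[0.5, -0.25],
           [1.0, 1.0]]
inputs = [0.8, 0.4]
approx = mixed_signal_layer(weights, inputs)
exact = [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in weights]
errors = [abs(a - e) for a, e in zip(approx, exact)]
```

With these settings the converted outputs stay within a few hundredths of the exact values, which is the kind of small error that many neural-network layers can absorb. Lowering `adc_bits` makes the trade-off between converter cost and accuracy visible.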

Commercialization Efforts Accelerate

Several startups and established semiconductor companies are now racing to commercialize various implementations of this technology. Some focus on AI accelerators for edge devices, where power constraints make conventional approaches impractical. Others target data center applications, where the energy savings could significantly reduce operational costs for hyperscalers.

The challenges remain substantial, from device variability in analog computation to the need for new design tools for these novel architectures. However, the potential rewards justify the investment. Early benchmark results show some implementations delivering 10x to 100x better energy efficiency than traditional digital ASICs for targeted workloads.
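Device variability can be explored with a small Monte Carlo experiment. The sketch below assumes a simple model in which every stored weight is off by an independent Gaussian relative error with standard deviation `sigma`. Real devices show more complicated behavior (drift, stuck cells, nonlinearity), so this is only a first-order illustration, and all names and values are hypothetical.

```python
import random


def matvec(weights, inputs):
    """Exact matrix-vector product; each row of weights yields one output."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def perturb(weights, sigma, rng):
    """Scale each weight by (1 + Gaussian(0, sigma)): the assumed variability model."""
    return [[w * (1.0 + rng.gauss(0.0, sigma)) for w in row] for row in weights]


def mean_relative_error(weights, inputs, sigma, trials=200, seed=0):
    """Average relative L2 error of the output over many simulated arrays.

    Assumes the exact output is not the zero vector.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    exact = matvec(weights, inputs)
    norm = sum(y * y for y in exact) ** 0.5
    total = 0.0
    for _ in range(trials):
        noisy = matvec(perturb(weights, sigma, rng), inputs)
        err = sum((a - b) ** 2 for a, b in zip(noisy, exact)) ** 0.5
        total += err / norm
    return total / trials


# Hypothetical 3x3 weight matrix and input vector.
W = [[0.2, -0.5, 0.1],
     [0.7, 0.3, -0.4],
     [-0.1, 0.6, 0.9]]
x = [1.0, 0.5, -0.25]
err_small = mean_relative_error(W, x, sigma=0.01)
err_large = mean_relative_error(W, x, sigma=0.10)
```

Comparing `err_small` and `err_large` shows how output error grows with device spread. That gives a rough way to ask how much variability a given workload could tolerate before accuracy suffers.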

Beyond AI: Broader Applications Emerge

While much of the initial excitement around in-memory computing has focused on AI acceleration, researchers are discovering broader applications. Scientific computing, signal processing, and even certain database operations show promise when implemented on these architectures. The common thread is any computation dominated by data movement or involving large-scale linear algebra operations.

Some of the most intriguing possibilities involve entirely new computing paradigms that blend digital and analog processing in ways that were impractical with traditional architectures. These could enable real-time processing of sensor data, ultra-efficient scientific simulations, and other applications we haven't yet imagined.

The Road Ahead

As with any disruptive technology, the path from lab to mainstream adoption will involve both technical and ecosystem challenges. Standardization efforts are just beginning, and the software stack for these architectures remains in its infancy. However, the fundamental physics advantages suggest that some form of in-memory computing will likely play a significant role in future computing systems.

The semiconductor industry stands at an inflection point where the traditional approaches to improving performance and efficiency are showing diminishing returns. In-memory computing with mixed-signal architectures offers a promising alternative path forward, one that could enable continued progress in an era where Moore's Law no longer delivers its historic benefits. As research progresses and commercialization efforts mature, we may be witnessing the early stages of a computing revolution that will reshape the technological landscape in the coming decade.

By /Jul 29, 2025
